The app, which resembles the internal tools Snap uses to build lenses in Snapchat, can be downloaded here. A flood of new AR objects inside Snapchat could be a creative boon for the app, where 70 million users a day interact with lenses.

“This has been an amazing year for AR as a technology,” says Eitan Pilipski, who leads Snap’s camera platform team. “We’re really excited to take this tool, and make it as simple as possible for any creator out there to have a presence on Snapchat.”

The move marks the first time that average users - and advertisers - will be able to create AR lenses for Snapchat. Lens Studio will offer them templates and guides for getting started, for both two- and three-dimensional designs. A scripting API allows users to build additional effects into their designs - tapping an object to change its shape, for example, or altering it as the user walks closer to it.

It’s a bid to make Snapchat the default place for flashy, viral AR experiences - think Dancing Hot Dog - at a time when deeper-pocketed rivals are circling. Facebook opened up its AR platform to developers on Tuesday, after introducing it at F8 earlier this year. Google and Apple have introduced AR platforms as well.

But while those companies might have more resources, Snap got off to an early start.

#Snapml lens studio for mac#

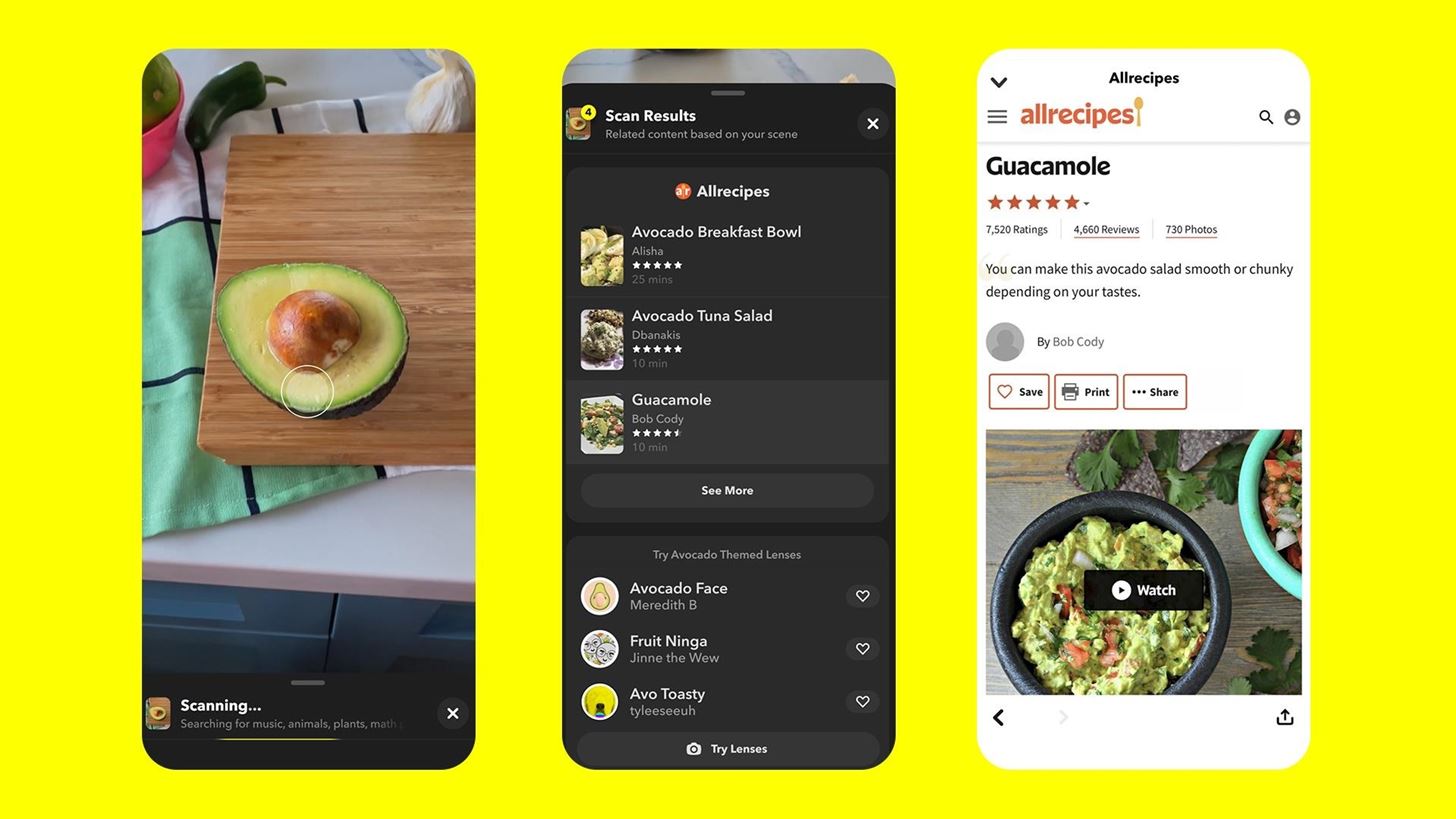

The company today announced Lens Studio, a design app for Mac and Windows desktop computers.

Snap’s second official app is a tool that lets you design and build augmented reality lenses for Snapchat.

Fritz AI models have these inputs and outputs generically configured to be compatible with Lens Studio, but there are some use cases where experimentation might make sense (a bit more on that later), so for simplicity's sake, I'll leave everything as is and click "Import".

Parts of the above gif are difficult to make out, and I want to point you to a couple of different things here, as I prepare to try to hook up the two ML tasks.

First, note that the ML Component is automatically nested under the Camera Object in the top left panel. This seems to allow the Component to communicate with this primary camera.

Second, the ML Component configuration. Note that, upon adding the style transfer model to the ML Component, I don't have anything set in terms of the input and output Textures. This means I'll have to adjust these textures in order to allow the camera to provide the model input (i.e. the scene), and the component to provide the Lens the output (the altered style).
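The texture wiring described above can be pictured with a small toy model. This is only a sketch of the data flow, not Lens Studio's scripting API: `ToyMLComponent`, `input_texture`, `output_texture`, and the `invert` stand-in for a style transfer model are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Frame = List[List[float]]  # a tiny grayscale "texture", values in [0, 1]


@dataclass
class ToyMLComponent:
    """Hypothetical stand-in for an ML Component: a model plus two texture slots."""
    model: Callable[[Frame], Frame]
    input_texture: Optional[Callable[[], Frame]] = None  # unset right after import
    output_texture: Optional[Frame] = None                # filled in by run()

    def run(self) -> Frame:
        # Without an input texture the model has nothing to read from.
        if self.input_texture is None:
            raise RuntimeError("input texture is not set")
        self.output_texture = self.model(self.input_texture())
        return self.output_texture


def invert(frame: Frame) -> Frame:
    """Placeholder for style transfer: simply inverts each pixel."""
    return [[1.0 - v for v in row] for row in frame]


component = ToyMLComponent(model=invert)

# Freshly imported: nothing is wired yet, so running fails.
try:
    component.run()
except RuntimeError as err:
    unwired_error = str(err)

# Wire the "camera" as the model input, then read the output texture.
component.input_texture = lambda: [[0.0, 1.0]]
styled = component.run()
```

In this toy version the camera is just a function that returns a frame. The point is only that the model cannot run until something supplies its input, and that the result has to be read back from the output slot before the Lens can display it.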

And with that separation, we have a very simple heart AR effect currently occupying the background. Note that, by default, the template opens with the Segmentation Controller (a script, essentially) opened in the Inspector on the right hand side.

So what I need to do here, in essence, is replace the heart AR effect with the output of my style transfer model. The first step here is to add a new ML Component Object that will contain this model.

Adding an ML Component Object

To add an ML Component, I simply click the '+' icon in the Objects panel (top left) and either scroll down to or search for ML Component. Upon selection, a Finder window pops up (I'm using a MacBook); this is where I'll select the .onnx file I downloaded in the previous step. Upon selecting this model, a Model Import dialogue box will open that allows you to modify model inputs and outputs.
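The goal stated above, showing the style transfer output behind the segmented person instead of the heart effect, amounts to a per-pixel blend driven by the segmentation mask. Below is a minimal sketch of that idea in plain Python, assuming tiny grayscale frames and a mask where 1.0 means "person" and 0.0 means "background". The function name `composite` and the sample values are illustrative and are not part of the template or of Lens Studio.

```python
def composite(camera, stylized, mask):
    """Keep the camera pixel where mask is 1, use the stylized pixel where it is 0."""
    out = []
    for cam_row, sty_row, mask_row in zip(camera, stylized, mask):
        out.append([
            m * c + (1.0 - m) * s
            for c, s, m in zip(cam_row, sty_row, mask_row)
        ])
    return out


# 2x2 example frames (grayscale values in [0, 1]).
camera = [[0.9, 0.8], [0.7, 0.6]]      # raw camera feed
stylized = [[0.1, 0.2], [0.3, 0.4]]    # style transfer output
mask = [[1.0, 0.0], [0.0, 1.0]]        # 1.0 = person, 0.0 = background

result = composite(camera, stylized, mask)
```

Fractional mask values would give a soft edge between the person and the stylized background, which is why the blend is written as a weighted sum rather than an if/else.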

As we can clearly see here, this template already has the human subject separated (or segmented) from the background of the scene.
